Cross Entropy Loss Function - torch.nn.CrossEntropyLoss

In both thermodynamics and computer science, entropy is a measure of disorder or uncertainty.

A motorcycle engine is a thermodynamic system, and it is almost impossible to know or control the exact location of a particular drop of gasoline within the gas tank. The droplets in the tank are always in a disordered, uncertain state.

However, the acceleration of a motorcycle can be controlled dynamically by a fuel injection system, which reduces the uncertainty of the combustion process within the engine.

Something similar happens when training a machine learning model: at first, the data are shuffled randomly into batches.

By monitoring the loss function, much as a fuel injection computer monitors the engine RPM, the training process adjusts the network's weights so that training examples are grouped into the correct classes. This reduces the output of the loss function, that is, the disorder in the grouping of the training data.

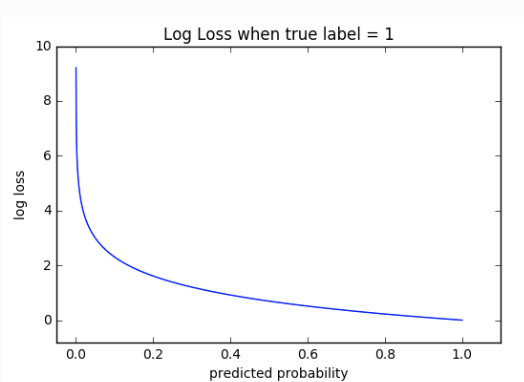

Cross-entropy loss, or log loss, measures the performance of a classification model whose output is a probability value between 0 and 1. Cross-entropy loss increases as the predicted probability diverges from the actual label.
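For reference, the definition above is commonly written as follows. The symbols are notation introduced here for illustration, not taken from the text: $N$ is the number of examples, $C$ the number of classes, $y$ the actual label (0 or 1, or one-hot in the multi-class case), and $p$ the predicted probability.

```latex
% Binary case (log loss)
L_{\text{binary}} = -\frac{1}{N} \sum_{i=1}^{N}
    \bigl[\, y_i \log p_i + (1 - y_i) \log (1 - p_i) \,\bigr]

% Multi-class case (cross-entropy with one-hot labels)
L_{\text{multi}} = -\frac{1}{N} \sum_{i=1}^{N} \sum_{c=1}^{C}
    y_{i,c} \log p_{i,c}
```

With one-hot labels, only the term for the true class survives in the inner sum, so the loss for one example is the negative log of the probability assigned to the true class.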

The implementation of the cross-entropy loss function in PyTorch is pretty straightforward.

import torch

import torch.nn as nn

loss = nn.CrossEntropyLoss()

target = torch.tensor([0])  # true class index (class 0)

print(target)

good_pred = torch.tensor([[0.1, 0.2, 0.3]])  # raw scores (logits) for 3 classes

bad_pred = torch.tensor([[0.11, 0.28, 0.34]])

cross_entropy_good = loss(good_pred, target)  # softmax is applied inside the loss

cross_entropy_bad = loss(bad_pred, target)

print(cross_entropy_good)

print(cross_entropy_bad)

best_good_pred, _ = torch.min(good_pred, 1)  # smallest score per row; here it sits at index 0, the true class

best_bad_pred, _ = torch.min(bad_pred, 1)

print(best_good_pred, best_bad_pred)

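The output shows that cross_entropy_good (about 1.20) is lower than cross_entropy_bad (about 1.24). Note that nn.CrossEntropyLoss treats its first argument as raw, unnormalized scores (logits), not as probabilities, and applies softmax internally.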
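To see where these numbers come from, the following sketch recomputes the loss by hand and compares it with nn.CrossEntropyLoss. It reuses the logits and class index from the example above. The helper name `manual_cross_entropy` is made up for illustration and is not part of PyTorch.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

target = torch.tensor([0])                      # true class index
good_pred = torch.tensor([[0.1, 0.2, 0.3]])     # logits from the example above
bad_pred = torch.tensor([[0.11, 0.28, 0.34]])

loss = nn.CrossEntropyLoss()


def manual_cross_entropy(logits, target):
    """Hypothetical helper: negative log-softmax of the target class, batch mean."""
    log_probs = F.log_softmax(logits, dim=1)                       # log-probabilities per class
    picked = log_probs.gather(1, target.unsqueeze(1)).squeeze(1)   # entry of the true class
    return -picked.mean()


for name, logits in [("good", good_pred), ("bad", bad_pred)]:
    builtin = loss(logits, target)
    manual = manual_cross_entropy(logits, target)
    print(name, builtin.item(), manual.item())
```

Both columns should agree up to floating-point error, which suggests that the built-in loss is log-softmax followed by picking out the true class and negating.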

References

Towards Data Science - Cross Entropy Loss Function

The softmax function converts the logits output by a neural network into a predicted probability distribution.

Cross entropy then compares the predicted probability distribution to the actual probability distribution.
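A minimal pure-Python sketch of these two steps, assuming made-up logits for a three-class problem. The function names `softmax` and `cross_entropy` are written here for illustration.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that are positive and sum to 1."""
    m = max(logits)                              # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(actual, predicted):
    """H(actual, predicted) = -sum(actual_c * log(predicted_c))."""
    return -sum(a * math.log(p) for a, p in zip(actual, predicted) if a > 0)


logits = [2.0, 1.0, 0.1]       # hypothetical network outputs
predicted = softmax(logits)    # predicted probability distribution
actual = [1.0, 0.0, 0.0]       # actual distribution: the true class is class 0

print(predicted, sum(predicted))
print(cross_entropy(actual, predicted))
```

When the predicted distribution equals the actual one-hot distribution, the cross entropy is 0. It grows as the probability assigned to the true class shrinks.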

PyTorch Loss Function Documentation

# Example of target with class indices

loss = nn.CrossEntropyLoss()

input = torch.randn(3, 5, requires_grad=True)

target = torch.empty(3, dtype=torch.long).random_(5)

output = loss(input, target)

output.backward()

# Example of target with class probabilities

input = torch.randn(3, 5, requires_grad=True)

target = torch.randn(3, 5).softmax(dim=1)

output = loss(input, target)

output.backward()

PyTorch Loss Functions: The Ultimate Guide

Other loss functions, like the squared loss, also punish incorrect predictions, but cross-entropy penalizes especially heavily for predictions that are very confident and wrong.
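The difference can be illustrated with a small sketch. It assumes a single example whose true class receives probability `p_true` from the model. The two helper functions are written here for illustration.

```python
import math


def squared_loss(p_true):
    """Squared error between the predicted probability of the true class and 1."""
    return (1.0 - p_true) ** 2


def cross_entropy_loss(p_true):
    """Negative log of the probability assigned to the true class."""
    return -math.log(p_true)


# Smaller p_true means the model is more confidently wrong.
for p_true in [0.9, 0.5, 0.1, 0.01, 0.001]:
    print(p_true, squared_loss(p_true), cross_entropy_loss(p_true))
```

The squared loss can never exceed 1 here, while the cross-entropy keeps growing without bound as `p_true` approaches 0.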

ML Cheatsheet Cross Entropy

Cross-entropy loss increases as the predicted probability diverges from the actual label. So predicting a probability of .012 when the actual observation label is 1 would be bad and result in a high loss value. A perfect model would have a log loss of 0.
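The numbers in this description can be checked with a short sketch of binary log loss for a single observation. The function `log_loss` and the clipping constant `eps` are assumptions made here so that a probability of exactly 0 or 1 does not cause a math error.

```python
import math


def log_loss(label, prob, eps=1e-15):
    """Binary log loss for one observation; prob is the predicted P(label == 1)."""
    prob = min(max(prob, eps), 1.0 - eps)   # clip to avoid log(0)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))


print(log_loss(1, 0.012))   # confident and wrong: high loss, roughly 4.42
print(log_loss(1, 0.9))     # mostly right: low loss
print(log_loss(1, 1.0))     # perfect prediction: loss is essentially 0
```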

Cross-entropy and log loss are slightly different depending on context, but in machine learning when calculating error rates between 0 and 1 they resolve to the same thing.

Papers with Code - Focal Loss Function

A Focal Loss function addresses class imbalance during training in tasks like object detection. Focal loss applies a modulating term to the cross entropy loss in order to focus learning on hard misclassified examples. It is a dynamically scaled cross entropy loss, where the scaling factor decays to zero as confidence in the correct class increases.
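A minimal sketch of the modulating term, assuming the commonly cited form FL(p_t) = -(1 - p_t)^gamma * log(p_t), where `p_t` is the predicted probability of the true class. The default `gamma=2.0` is an assumption for illustration, and the optional class-balancing weight is left out.

```python
import math


def cross_entropy(p_t):
    """Plain cross-entropy for one example: -log of the true-class probability."""
    return -math.log(p_t)


def focal_loss(p_t, gamma=2.0):
    """Cross-entropy scaled by (1 - p_t) ** gamma, which shrinks for easy examples."""
    return (1.0 - p_t) ** gamma * cross_entropy(p_t)


# The scaling factor decays toward zero as confidence in the correct class increases.
for p_t in [0.1, 0.5, 0.9, 0.99]:
    print(p_t, cross_entropy(p_t), focal_loss(p_t))
```

With `gamma=0` the scaling factor is 1 and the focal loss reduces to ordinary cross-entropy. Larger values of `gamma` down-weight well-classified examples more strongly.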